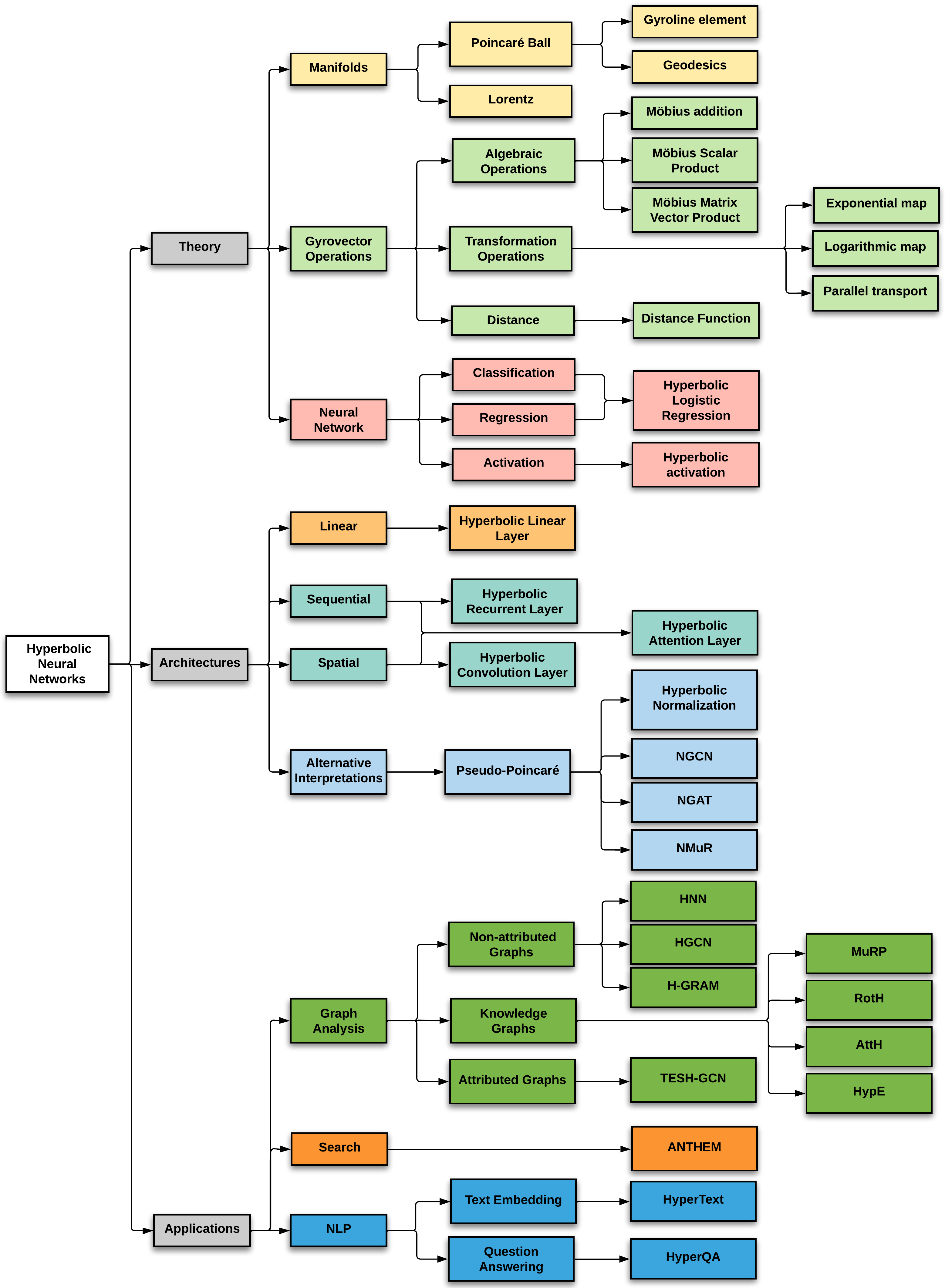

Hyperbolic Networks: Theory, Architectures and Applications

Tutorial @ TheWebConf 2022 · Online · April 25, 2022, 8:00 am ET.

Code Library: GraphZoo: Facilitating learning, using, and designing graph processing pipelines

Graphs are ubiquitous data structures that are widely used in many data storage scenarios, including social networks, recommender systems, knowledge graphs and e-commerce. This has led to the rise of GNN architectures that analyze and encode information from graphs for better performance in downstream tasks. While early research in graph analysis was driven by neural architectures, recent studies have revealed important properties unique to graph datasets, such as hierarchies and global structures. This has driven research into hyperbolic space, owing to its ability to effectively encode the hierarchy inherent in graph datasets. This line of research has since been applied to other domains, such as NLP and computer vision, with strong results. However, the major obstacles to further growth are the relative obscurity of hyperbolic networks and the lack of a clear understanding of the algebraic operations needed to extend them to different neural network architectures.

In this tutorial, we aim to introduce researchers and practitioners in the web domain to the hyperbolic equivalents of the Euclidean operations that are needed to apply hyperbolic geometry to neural network architectures. Additionally, we describe popular hyperbolic variants of GNN and other neural architectures, such as recurrent, convolutional and attention networks, and explain how their implementation differs from that of their Euclidean counterparts. Furthermore, we motivate the tutorial through existing applications in graph analysis, knowledge graph reasoning, product search, NLP and computer vision, and compare their performance against their Euclidean counterparts.
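To make the idea of hyperbolic counterparts of Euclidean operations more concrete, here is a minimal sketch, assuming the Poincaré-ball model with curvature -c (c > 0) and the Möbius-gyrovector formulas commonly used for hyperbolic neural network layers. Vector addition becomes Möbius addition, the exponential and logarithmic maps at the origin move between the ball and its Euclidean tangent space, and a linear layer becomes a Möbius matrix-vector product followed by a Möbius bias addition. All function names (`mobius_add`, `expmap0`, `logmap0`, `dist`, `mobius_matvec`, `hyp_linear`) are hypothetical illustrations written in plain Python; they are not GraphZoo's API.

```python
import math

# Hypothetical sketch of Poincaré-ball operations with curvature -c (c > 0).
# Vectors are plain Python lists of floats; this is not GraphZoo's API.

MAX_ARG = 1.0 - 1e-7  # keeps artanh finite for points very close to the boundary
EPS = 1e-15           # avoids division by zero for the zero vector


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(dot(x, x))


def scale(s, x):
    return [s * a for a in x]


def mobius_add(x, y, c=1.0):
    """Möbius addition x (+)_c y, the hyperbolic counterpart of x + y."""
    xy, x2, y2 = dot(x, y), dot(x, x), dot(y, y)
    coef_x = 1 + 2 * c * xy + c * y2
    coef_y = 1 - c * x2
    denom = 1 + 2 * c * xy + c * c * x2 * y2
    return [(coef_x * a + coef_y * b) / denom for a, b in zip(x, y)]


def expmap0(v, c=1.0):
    """Map a Euclidean (tangent) vector at the origin onto the ball."""
    sc = math.sqrt(c)
    n = max(norm(v), EPS)
    return scale(math.tanh(sc * n) / (sc * n), v)


def logmap0(y, c=1.0):
    """Map a point on the ball back to the tangent space at the origin."""
    sc = math.sqrt(c)
    n = max(norm(y), EPS)
    return scale(math.atanh(min(sc * n, MAX_ARG)) / (sc * n), y)


def dist(x, y, c=1.0):
    """Geodesic distance (2 / sqrt(c)) * artanh(sqrt(c) * ||(-x) (+)_c y||)."""
    sc = math.sqrt(c)
    diff = mobius_add(scale(-1.0, x), y, c)
    return 2.0 / sc * math.atanh(min(sc * norm(diff), MAX_ARG))


def mobius_matvec(M, x, c=1.0):
    """Hyperbolic matrix-vector product exp_0(M log_0(x))."""
    u = logmap0(x, c)
    return expmap0([dot(row, u) for row in M], c)


def hyp_linear(M, b, x, c=1.0):
    """Hyperbolic linear layer (M (x)_c x) (+)_c b, where b is a point on the ball."""
    return mobius_add(mobius_matvec(M, x, c), b, c)


if __name__ == "__main__":
    # The same Euclidean gap (0.05) is much longer near the boundary of the ball.
    print(dist([0.0, 0.0], [0.05, 0.0]))  # about 0.10
    print(dist([0.9, 0.0], [0.95, 0.0]))  # about 0.72
    print(hyp_linear([[0.5, 0.0], [0.0, 2.0]], [0.1, 0.0], [0.3, -0.2]))
```

The two distance printouts illustrate why this geometry is attractive for hierarchical data: distances grow rapidly toward the boundary, so deep levels of a tree can be spread out without crowding. Routing the linear layer through the tangent space at the origin is one common design choice; other formulations (for example, the hyperboloid model) exist and may be more numerically stable in practice.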